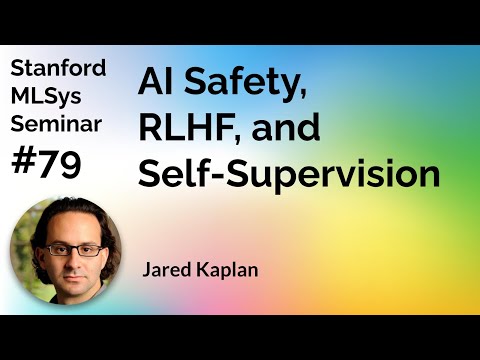

AI Safety, RLHF, and Self-Supervision – Jared Kaplan | Stanford MLSys #79

Episode 79 of the Stanford MLSys Seminar “Foundation Models Limited Series”!

Speaker: Jared Kaplan

Title: AI Safety, RLHF, and Self-Supervision

Bio: Jared Kaplan is a co-founder of Anthropic and a professor at Johns Hopkins University. He spent the first 15 years of his career as a theoretical physicist before moving into AI, where his contributions include research on scaling laws in machine learning, GPT-3, Codex, and, more recently, AI safety work such as RLHF for helpful and harmless language assistants and Constitutional AI.

Check out our website for the schedule: http://mlsys.stanford.edu

Join our mailing list to get weekly updates: https://groups.google.com/forum/#!forum/stanford-mlsys-seminars/join