http://numer.ai/mlst

Patreon: https://www.patreon.com/mlst

Discord: https://discord.gg/ESrGqhf5CB

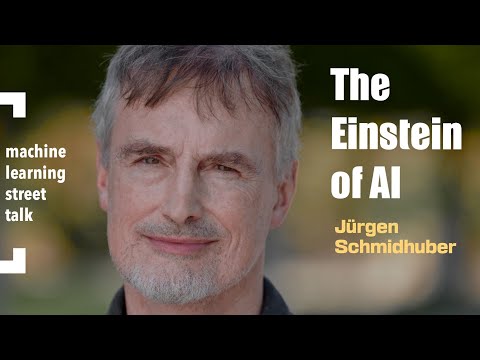

Professor Jürgen Schmidhuber, often described as the father of modern AI, joins us today. Schmidhuber discussed the history of machine learning, the current state of AI, and his career researching recursive self-improvement, artificial general intelligence and its risks.

Schmidhuber pointed out the importance of studying the history of machine learning to properly assign credit for key breakthroughs. He discussed some of the earliest machine learning algorithms and highlighted two foundational contributions: Leibniz's chain rule, which underpins the backpropagation used to train deep neural networks, and the ancient Greek Antikythera mechanism, the first known gear-based computer.
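
To make the role of the chain rule concrete, here is a minimal sketch, assuming a hypothetical two-parameter scalar network y = w2 · tanh(w1 · x) with a squared-error loss. The gradient is obtained by multiplying local derivatives backwards from the loss, which is what backpropagation does at scale in deep networks, and a finite-difference check confirms the result. The function names and numbers are illustrative only.

```python
import math


# Hypothetical toy network: y = w2 * tanh(w1 * x), loss L = (y - target) ** 2.
def forward(w1, w2, x, target):
    h = math.tanh(w1 * x)  # hidden activation
    y = w2 * h             # network output
    loss = (y - target) ** 2
    return h, y, loss


def grads_chain_rule(w1, w2, x, target):
    """Gradients of the loss w.r.t. w1 and w2, applying the chain rule backwards."""
    h, y, _ = forward(w1, w2, x, target)
    dL_dy = 2.0 * (y - target)          # dL/dy
    dL_dw2 = dL_dy * h                  # dy/dw2 = h
    dL_dh = dL_dy * w2                  # dy/dh = w2
    dL_dw1 = dL_dh * (1.0 - h * h) * x  # dh/dw1 = (1 - tanh^2(w1 * x)) * x
    return dL_dw1, dL_dw2


def grads_finite_diff(w1, w2, x, target, eps=1e-6):
    """Central finite differences, used only as a sanity check."""
    def loss(a, b):
        return forward(a, b, x, target)[2]

    g1 = (loss(w1 + eps, w2) - loss(w1 - eps, w2)) / (2 * eps)
    g2 = (loss(w1, w2 + eps) - loss(w1, w2 - eps)) / (2 * eps)
    return g1, g2


if __name__ == "__main__":
    print(grads_chain_rule(0.5, -1.2, 2.0, 0.3))
    print(grads_finite_diff(0.5, -1.2, 2.0, 0.3))
```

The two printed pairs should agree to several decimal places; a small gradient-descent step along the chain-rule gradient then lowers the loss.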

Schmidhuber discussed limits to recursive self-improvement and artificial general intelligence, including physical constraints such as the speed of light and the fundamental limits of what can be computed. He noted that there is no evidence the human brain can do more than a conventional computer. Schmidhuber sees humankind as a potential stepping stone to more advanced, spacefaring machine life, which may have little interest in humanity. However, he believes commercial incentives push AGI development towards being beneficial, and that open-source innovation can help achieve “AI for all”, symbolised by his company’s motto “AI∀”.

Schmidhuber discussed approaches he believes will lead to more general AI, including meta-learning, reinforcement learning, predictive world models, and curiosity-driven learning. His 1991 “fast weight programming” approach, in which one network learns to alter another network’s connection weights, was, he argues, the first Transformer variant: it is what is now called an unnormalised linear Transformer. He also described his 1990 adversarial networks for implementing artificial curiosity, which he regards as the first GANs.
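
The equivalence between fast weight programmers and unnormalised linear Transformers can be sketched in a few lines. In this minimal pure-Python illustration, a fast weight matrix W is "programmed" by adding outer products v_t k_t^T, and reading it with a query q_t gives the same output as linear attention without softmax or normalisation. The function names, and the choice to pass keys, values and queries in directly, are assumptions for illustration; in a full model a slow network (or learned projections) would produce them from the input sequence, and published variants may also apply a feature map to keys and queries.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def outer(v: Vector, k: Vector) -> Matrix:
    return [[vi * kj for kj in k] for vi in v]


def matvec(W: Matrix, q: Vector) -> Vector:
    return [sum(wij * qj for wij, qj in zip(row, q)) for row in W]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def fast_weight_programmer(keys, values, queries) -> List[Vector]:
    """Sequential view: W_t = W_{t-1} + v_t k_t^T, output y_t = W_t q_t."""
    d_out, d_in = len(values[0]), len(keys[0])
    W = [[0.0] * d_in for _ in range(d_out)]  # fast weights start at zero
    outputs = []
    for k, v, q in zip(keys, values, queries):
        delta = outer(v, k)  # the "programming" step: an additive outer product
        W = [[w + d for w, d in zip(wr, dr)] for wr, dr in zip(W, delta)]
        outputs.append(matvec(W, q))
    return outputs


def unnormalised_linear_attention(keys, values, queries) -> List[Vector]:
    """Attention view: y_t = sum over tau <= t of v_tau * (k_tau . q_t)."""
    outputs = []
    for t, q in enumerate(queries):
        y = [0.0] * len(values[0])
        for k, v in zip(keys[: t + 1], values[: t + 1]):  # causal: past and present only
            score = dot(k, q)  # raw dot product, no softmax
            y = [yi + score * vi for yi, vi in zip(y, v)]
        outputs.append(y)
    return outputs
```

Both functions compute the same outputs; the first keeps a fixed-size weight matrix that is updated step by step, while the second revisits every past key and value at each step.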

Schmidhuber reflected on his career researching AI. He said his fondest memories were of gaining insights that seemed to solve longstanding problems, though new challenges always arose: “then for a brief moment it looks like the greatest thing since sliced bread and then you get excited … but then suddenly you realize, oh, it’s still not finished. Something important is missing.” Since 1985 he has worked on systems that can recursively improve themselves, constrained only by the limits of physics and computability. He believes continual progress, shaped by both competition and collaboration, will lead to increasingly advanced AI.

On AI risk, Schmidhuber said: “To me it’s indeed weird. Now there are all these letters coming out warning of the dangers of AI. And I think some of the guys who are writing these letters, they are just seeking attention because they know that AI dystopia are attracting more attention than documentaries about the benefits of AI in healthcare.”

Schmidhuber believes we should be more concerned with existing threats like nuclear weapons than speculative risks from advanced AI. He said: “As far as I can judge, all of this cannot be stopped but it can be channeled in a very natural way that is good for humankind…there is a tremendous bias towards good AI, meaning AI that is good for humans…I am much more worried about 60 year old technology that can wipe out civilization within two hours, without any AI.”

However, Schmidhuber acknowledges there are no guarantees of safety, saying “there is no proof that we will be safe forever.” He supports research on AI alignment but believes there are limits to controlling the goals of advanced AI systems.

Schmidhuber: “There are certain algorithms that we have discovered in past decades which are already optimal in a way such that you cannot really improve them any further and no self-improvement and no fancy machine will ever be able to further improve them…There are fundamental limitations of all of computation and therefore there are fundamental limitations to any AI based on computation.”

Overall, Schmidhuber believes existential risks from advanced AI are often overhyped compared to existing threats, though continued progress makes future challenges hard to predict. While control and alignment are worthy goals, he sees intrinsic limits to how much human values can ultimately be guaranteed in advanced systems. Schmidhuber is cautiously optimistic.

Note: This interview was recorded on 15th June 2023.


Panel: Dr. Tim Scarfe @ecsquendor / Dr. Keith Duggar @DoctorDuggar

Pod version: TBA

TOC:

[00:00:00] Intro / Numerai

[00:00:51] Show Kick Off

[00:02:24] Credit Assignment in ML

[00:12:51] XRisk

[00:20:45] First Transformer variant of 1991

[00:47:20] Which Current Approaches are Good

[00:52:42] Autonomy / Curiosity

[00:58:42] GANs of 1990

[01:11:29] OpenAI, Moats, Legislation